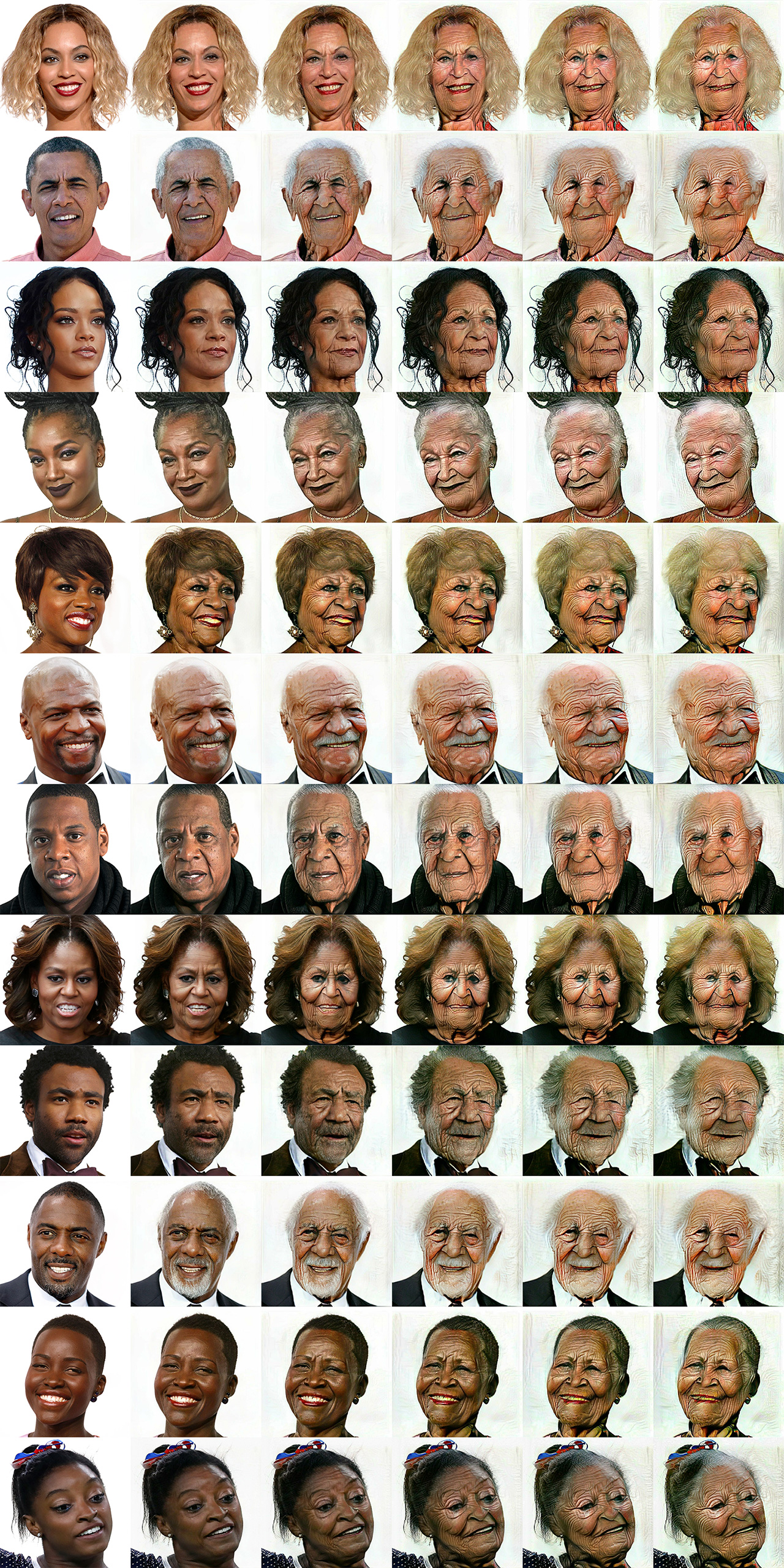

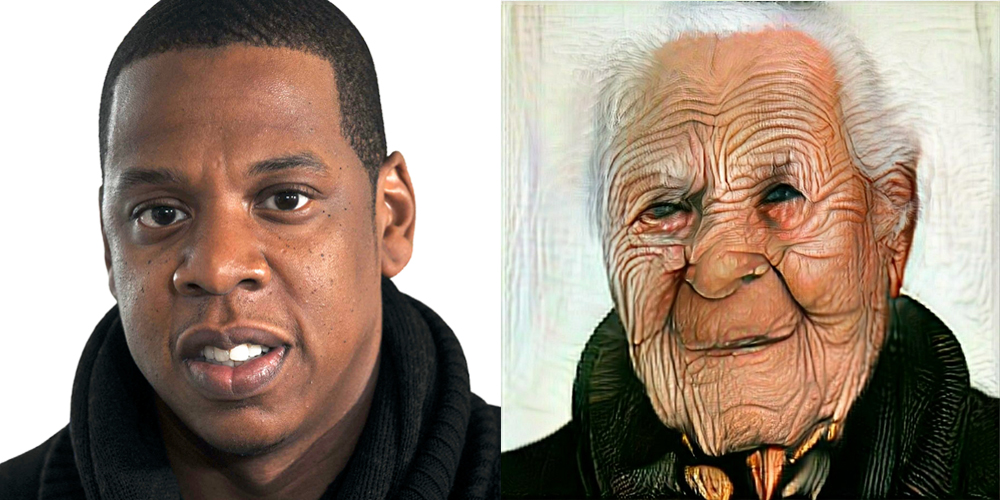

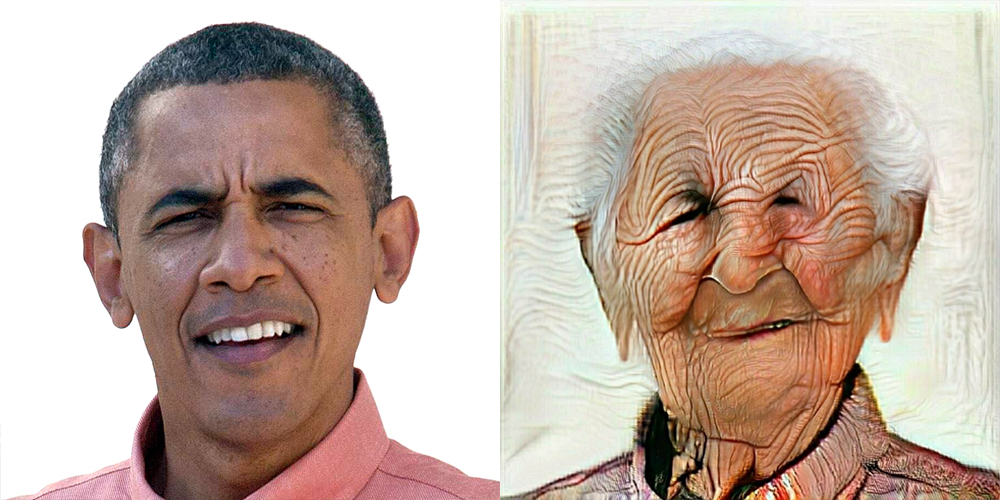

FaceApp, the Russian mobile app that went viral for generating an “elderly version” of its users, has seen its immense popularity give way to a string of privacy questions.

Questions arose about what data the app collects and shares, along with fears about a single company holding a facial database of 150 million people worldwide.

But the company’s questionable practices may go further than that.